It is important to understand that the prospects of limiting the permanent environmental consequences brought on by global warming come down to a combination of technological intervention, commonly referred to as geo-engineering, and significant reductions in global emissions of greenhouse gases (GHGs), especially CO2. The answer is not one or the other, for neither geo-engineering nor emission reduction can stand on its own as a solution. The rationale behind such an assertion is that technology can only go so far in neutralizing or even masking the effects of global warming, and only within a certain range of temperatures; continuing emissions would eventually either eclipse any form of technological intervention or adaptation or bankrupt the world. With regard to the necessity of geo-engineering, unfortunately the current and future influence of CO2 and other GHGs on the Earth’s temperature has left little reason to believe that existing natural carbon sinks will limit the permanent detrimental effects brought on by rapid increases in temperature and oceanic acidity.

As of late 2008 the concentration of CO2 in the atmosphere was 385-386 ppm.1 Current annual global emissions are estimated to be between 28 and 33 GtCO2, increasing 1.8% annually. Emitting approximately 7.8 GtCO2 (2.123 Gt carbon) increases the concentration of CO2 in the atmosphere by 1 ppm.2 The IPCC (2007) estimates that oceanic carbon sinks annually absorb approximately 8.056 GtCO2 and land-based carbon sinks take in another 3.30 GtCO2.3 Unfortunately these estimates come with wide uncertainty ranges; total absorption could be anywhere from 50% lower to 50% higher than the above values.3 Taking all this information into account, and using the central sink estimates, the concentration of CO2 in the atmosphere increases by approximately 2.133 to 2.775 ppm per year. This figure considers only CO2 itself; other GHGs are not folded in as a CO2-equivalent value. With emissions projected to rise to almost 44 GtCO2 in the next 20 years, such a rate of increase is not favorable, especially considering that a number of individuals regard a concentration of 350 ppm as the threshold for environmental maintenance and safety.1 Yet, even with this concentration as a general safety point, most environmentalists believe that, under current conditions, sustaining a concentration of 400 ppm is a sufficient goal.
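The arithmetic behind the 2.133 to 2.775 ppm range can be reproduced with a short sketch. It uses only the figures quoted above; the `sink_scale` parameter is a hypothetical knob added here to explore the stated ±50% sink uncertainty and is not part of the original estimate.

```python
# Figures quoted in the text (GtCO2 per year unless noted).
GT_CO2_PER_PPM = 7.8   # emissions needed to raise atmospheric CO2 by 1 ppm
OCEAN_SINK = 8.056     # IPCC (2007) central estimate
LAND_SINK = 3.30       # IPCC (2007) central estimate


def annual_ppm_increase(emissions_gt, sink_scale=1.0):
    """Net yearly rise in atmospheric CO2 (ppm) for a given emission level.

    sink_scale is a hypothetical multiplier on total sink uptake
    (0.5 = sinks 50% weaker, 1.5 = sinks 50% stronger).
    """
    net_emissions = emissions_gt - sink_scale * (OCEAN_SINK + LAND_SINK)
    return net_emissions / GT_CO2_PER_PPM


low = annual_ppm_increase(28.0)
high = annual_ppm_increase(33.0)
print(f"central sinks: {low:.3f} to {high:.3f} ppm per year")

# Same emissions range with sinks 50% weaker and 50% stronger.
for scale in (0.5, 1.5):
    print(scale, round(annual_ppm_increase(28.0, scale), 3),
          round(annual_ppm_increase(33.0, scale), 3))
```

Rounded to three decimals the low end prints as 2.134 rather than 2.133; the text's figure appears to be truncated instead of rounded.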

Suppose the world actually gets its act together and starts significantly reducing emissions, not just talking about it by announcing glorious reduction goals at conferences, most of which are fairly unrealistic right now. Barring the development of an incredible new energy source, such as economically viable hot fusion, it is realistic, based on current behavior, to expect a maximum reduction of 50% in global emissions from current levels over the next 40 years. For simplicity assume that this reduction occurs in a linear fashion. The table below illustrates the change in atmospheric CO2 concentration leading up to 2050.
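A minimal sketch of this scenario follows. The per-ppm factor and sink totals are the figures quoted earlier. The starting concentration of 386 ppm, the year-by-year stepping, and the function name `project_concentration` are assumptions made here for illustration, so the output may not match the author's table exactly.

```python
GT_CO2_PER_PPM = 7.8          # GtCO2 per 1 ppm, as quoted earlier
TOTAL_SINK = 8.056 + 3.30     # ocean + land uptake, held constant (assumption)


def project_concentration(start_ppm, start_emissions, years=40, total_cut=0.5):
    """Year-end CO2 concentrations for a linear emissions decline.

    Emissions in year k are start_emissions * (1 - total_cut * k / years),
    so the full cut is reached in the final year.
    """
    ppm = start_ppm
    path = []
    for year in range(1, years + 1):
        emissions = start_emissions * (1.0 - total_cut * year / years)
        ppm += (emissions - TOTAL_SINK) / GT_CO2_PER_PPM
        path.append(ppm)
    return path


# 386 ppm is the late-2008 value, used here as an assumed starting point.
for start_emissions in (28.0, 33.0):
    path = project_concentration(386.0, start_emissions)
    print(f"start at {start_emissions} GtCO2/yr -> {path[-1]:.1f} ppm after 40 years")
```

Under these assumptions the concentration never falls. Even in the final year of the low case, emissions of 14 GtCO2 still exceed the assumed sink uptake of about 11.4 GtCO2.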

After viewing the final concentrations at 2050 one could conclude that those values are not bad at all, all things considered. Unfortunately these values represent a possibility that is still improbable. Why is the above scenario improbable? First, in the above scenario the emission reductions begin right away in 2010, a result that is unlikely in reality despite the potential passage of the Waxman-Markey bill in the United States, which was previously discussed. Currently no climate legislation is even up for discussion in China and India, two large polluters in their own right. Second, even though the rate of increase in atmospheric CO2 concentration slows, the concentration is still increasing despite a 50% reduction in current emissions. Third, this example examines only CO2, not the other greenhouse gases that also influence temperature change, and does not take into consideration any positive feedback forcing that would occur due to rising temperatures, permafrost thawing or melting arctic ice.

Fourth, this example assumes that the capacity for CO2 in all naturally occurring sinks will remain constant. It is highly unlikely that this assumption will be accurate. However, whether the absorbing capacity will increase or decrease is a point of contention. Some believe that the accelerated growth of trees due to the increased atmospheric concentration of CO2 and a warmer climate will increase land sink capacity. Unfortunately it is highly probable that the overall change in growth would be proportionally smaller than the increase in CO2 required to produce it, because although CO2 may be the limiting factor in most cases of photosynthesis, relieving that limit does not yield a proportional gain in growth. For example, increasing the atmospheric CO2 concentration by 3% may increase tree growth and resultant CO2 absorbance by 0.5%, but not by 5%. If this is the case, any increase in growth will be significantly lower than what is needed to offset the CO2 released into the atmosphere to generate that growth. Even if this increase proves to be real, prognosticating its size is remarkably difficult due to the uncertainty surrounding the number of new trees planted, their lifespan, the amount of prevented deforestation, etc.

Unfortunately it seems much more probable that the capacity of natural sinks will decrease with time if atmospheric CO2 concentrations remain high. Continued deforestation and current agricultural practices will reduce available land sinks while acidification of oceans will reduce oceanic sinks. The ocean sinks in particular are rather tricky because of the uncertainty about the relationship between different sequestering and releasing elements.

When CO2 dissolves in seawater, carbonate ion concentrations drop and ocean acidity increases. In fact, CO2 absorption has reduced surface pH by approximately 0.1 since the start of the industrial era, after over 100 million years of steady decrease in acidity.4,5,6 Carbonate becomes thermodynamically less stable as oceanic acidity increases, in turn raising the metabolic cost to organisms of constructing carbonate-based structures (shells and skeletons). The Southern Ocean near Antarctica is already experiencing significant warming far beyond anywhere else in the world, and the increased acidification is having a negative influence on the ability of G. bulloides to build their shells.7 Similar reductions in calcification rates have also been seen in the Arabian Sea for other carbonate shell builders.8

The rate of calcium carbonate precipitation is an important element in determining the sink capacity of the ocean because calcium carbonate tends to be removed through gravitational settling.6 Considering this removal is important because, although precipitation decreases the total sum of dissolved inorganic carbon species (DIC), the remaining carbon shifts its balance in favor of dissolved CO2 (aq), raising the partial pressure of CO2 in the ocean.6 The reason for the shift is the loss of carbonate ion (CO3^2-), which drives the aqueous carbonate equilibrium reaction [CO2 (aq) + CO3^2- + H2O ↔ 2 HCO3^-] to the left to compensate.6 However, dissolution of calcium carbonate results in the opposite shift, reducing the oceanic concentration of CO2 and enhancing uptake of atmospheric CO2. Basically, precipitation of carbonate reduces CO2 uptake from the atmosphere whereas dissolution of carbonate increases it.

However, a second factor must be considered: the association between particulate organic carbon and shifts in calcium carbonate concentration.9,10 A decrease in calcium carbonate reduces the rate and effectiveness of moving particulate organic carbon to deeper waters, thus weakening the biological pump portion of oceanic CO2 absorption.6 This result reduces the total CO2 sink capacity of biological denizens of the ocean like phytoplankton.11,12 So an impasse exists: does decreasing the concentration of calcium carbonate increase or decrease oceanic sink capacity? Currently there is no good answer to that question. However, even if sink capacity is increased, ocean acidity will also increase, undermining the life-sustaining ability of the ocean.

Another significant factor of change could be melting arctic ice. The additional liquid volume provided by melting could possibly increase the ability of the ocean to absorb CO2, but any increase in capacity would be only a very small percentage of the total existing capacity and could also be rendered moot in the context of warming because of the reduced albedo from the loss of white ice. In fact, some groups have pointed out that the rapid acceleration of arctic ice loss places a significant hurdle in the way of reducing surface temperature, one that may not be addressed by simply reducing CO2 emissions.13

From a land sink perspective, planting new trees and other flora would indeed increase overall CO2 absorption, but first deforestation practices would have to end. Also, with a world population that continues to increase, finding areas in which to plant these trees would be difficult. Most of the current deforestation is the result of clearing land for agricultural purposes such as food and/or bio-fuel production. Competition over land use is a problem because of the need to feed a growing population that wants significant choice in food consumption, not just grains and vegetables, alongside a growing demand for bio-fuel and biomass energy. With these two dueling elements, planting new trees seems to be a distant third. Even if planting trees were first on the list for land use, additional absorption from trees would more than likely be far too slow to ward off significant detrimental climate change. Unfortunately speed is an issue, and speed is something trees do not do well; they absorb by volume, not efficiency, and apparently volume is out of the question. Adding to all of that, there is evidence that natural sinks, both terrestrial and oceanic, have declined since the 1990s.14 Overall it is probably unrealistic to expect an increase in absorption capacity for natural sinks and reasonable to expect a greater than even chance that capacity will decrease over time.

Even if natural carbon-sink capacity remains constant, it is not acceptable to allow atmospheric CO2 levels to persist at 400 ppm or higher without taking action. Any real interventional action can be categorized in one of two ways. The first category focuses on reducing the amount of sunlight striking the Earth or the fraction of it that is absorbed. The second category focuses on reducing the amount of CO2 that is already in the atmosphere. Both strategies aim to reduce the overall temperature of the Earth, but the difference in approach is considerable. Note that, as previously mentioned, in addition to these efforts the global community will also have to engage in strategies to reduce the amount of CO2 and other GHGs released into the atmosphere.

One of the most popular options in this first category is seeding the atmosphere with significant quantities of sulfur dioxide or a similar compound. The basis of this strategy is rooted in the eruption of Mount Pinatubo on June 15, 1991 and the resultant release of 20 million tons of sulfur dioxide, which lowered the average temperature of the Earth by 0.5 °C over the period of a year.15 This strategy is attractive because it is low in cost and can be administered with existing technology.

Unfortunately there are some significant concerns with this solution. First, the sulfur dioxide will not remain in the atmosphere for more than three to four months, thus concentrations would need to be replenished at a quarterly rate. Second, the sulfur dioxide would significantly compromise the ozone layer, increasing the probability that higher numbers of individuals would suffer from skin cancer and other UV-related conditions. Third, when the sulfur dioxide falls from the atmosphere there is reason to believe that it would have deleterious effects on both the ocean and land masses. Fourth, the significant reduction of sunlight reaching the Earth’s surface would have a negative influence on solar power installations, a significant concern in that solar power is thought to be one of the chief power sources in an energy environment that does not rely on fossil fuels. Fifth, there are some questions regarding how effective this method would actually be, in that a significant amount of sulfur dioxide did not reach the atmosphere during the eruption of Mount Pinatubo and the drop in temperature could have been more of a localized effect than a global one. Thus estimates of the amount of sulfur dioxide required to lower the Earth’s temperature globally by a certain amount over a specific time period could be inaccurate. Sixth, although the technology already exists to release large quantities of sulfur dioxide into the atmosphere, the political structure does not. No protocol exists for who would actually disperse the sulfur dioxide, who would maintain it and who would accept responsibility for any damages incurred during its administration.

So the simplest solution in category one still has some difficult questions that need to be answered. Another option that has received attention is the distribution of various space-based mirrors or other covering material, usually at Lagrange Point 1 or 2, which would reflect a specific percentage of sunlight before it even reaches Earth leading to a reduction in temperatures. Unlike launching sulfur dioxide, the space mirror idea is immediately saddled with significant economic viability questions as well as technology questions. Although some technology hurdles for this solution have been overcome, notably due to the work of Roger Angel and his associates at the University of Arizona, these advances have not reduced the overall price of installing and maintaining the mirror.16 A large part of the costs for this strategy is derived from launching the mirror sections into space. The development of a secondary and much cheaper means to launch objects from Earth into Near Earth Orbit would go a long way to making a space mirror system more economically viable. Unfortunately other problems persist, most notably maintenance issues. Repairing any portion of the mirror that is damaged would prove to be a difficult endeavor and a costly one both financially and environmentally because a certain percentage of sunlight would no longer be reflected elsewhere. Overall by the time a space mirror becomes economically viable and operational, too much permanent damage will have been done to the environment making the solution moot.

Although there are other options in the first category, they will not be discussed due to similarity with the two above options or improbability. Basically the first category can be summed up as an attempt to treat the symptoms or negative outcomes of a condition, but not the condition itself. Redirecting the light from the sun to another location may in fact reduce surface and air temperatures, but does nothing to reduce the concentration of CO2 and other GHGs in the atmosphere. These strategies can be equated to a patient in the hospital who is bleeding profusely where, instead of taking the patient into surgery to stop the bleeding, the treatment focuses on replacing the lost blood for as long as possible until the patient heals him/herself. Therein lies the problem with sunlight blocking methods: they do not solve the problem, they just seek to delay its consequences. With the continuing build-up of CO2, what happens when a section of the solar mirror breaks or wind patterns shift unexpectedly and disturb the distribution of the sulfur dioxide in the air? Basically these strategies need to be maintained without fail for as long as CO2 concentrations exceed safe levels, which looks to be decades, if not centuries.

Similar to the first category, the second category of possible solutions has an option that is viewed as economically and technologically viable right at this moment. Ocean fertilization involves seeding swaths of the ocean with iron, leading to rapid phytoplankton growth, which would then absorb CO2 from the ocean for use in photosynthesis. With decreasing concentrations of CO2 in the ocean, the ocean will be able to absorb more CO2 from the atmosphere, reducing atmospheric CO2 concentrations. Later on, when these phytoplankton die, they would sink to the bottom of the ocean, effectively removing the CO2 from the short-term carbon cycle. Proponents of ocean fertilization believe it to be a cheap, easy and effective means of controlling global warming that is in a similar vein to planting trees, only much faster and cheaper. However, similar to launching sulfur dioxide into the atmosphere, there are some concerns about ocean fertilization.

The biggest concern is the potential ecological damage generated by cultivating an extremely large amount of phytoplankton in a region that is not prepared to receive it. One continuing problem in the industrialized world is the expansion of hypoxic areas in bodies of water. These areas have been labeled ‘dead-zones’ and they frequently come about due to large concentrations of fertilizer run-off, which fosters blooms of algae and phytoplankton. These blooms eventually die and sink to the bottom of the body of water, where they are broken down by bacteria. The bacteria multiply rapidly and consume larger than normal quantities of oxygen, stripping the region of a significant portion of its oxygen. There is reason to believe that a similar situation will occur when artificially stimulating the rapid growth of phytoplankton for CO2 absorption. Empirically no ‘dead-zones’ have been generated by past fertilization experiments, but those experiments were short enough that the possibility cannot be ruled out; one imagines that creating a hypoxic region in an ocean with sufficient pre-existing levels of oxygen would require more time than just a couple of months. The short time frame may also explain some of the ‘less than encouraging’ absorption ability of the blooms in these experiments.

There are other problems, including a significant reduction of sunlight beneath the area covered by the blooms, which would negatively affect coral reefs and other underwater plant life. Also, the potential exists to exacerbate global warming due to increased release rates of methane and nitrous oxide from the blooms and resultant bacteria growth. In addition, fertilization programs are a logistics nightmare because the added iron quickly mixes into the ocean, eliminating any real ability to distinguish fertilized water from unfertilized water unless a tracer is utilized as well. Blooms must be continually monitored so that any problems can be quickly addressed and neutralized.

It is difficult to postulate whether or not fertilizing with iron will result in similar dead-zones due to the prescribed phytoplankton blooms. However, it seems probable that one of two results will occur, either a dead-zone will be created or the resultant number of phytoplankton will not be large enough to significantly reduce the probability for climate change. Dead-zones seem probable because the defining characteristic of a dead-zone is large unnatural phytoplankton and/or algae blooms, not a specific method which results in the creation of those blooms. If fertilization does create dead-zones then it becomes a very difficult question whether or not to utilize iron fertilization as a viable method for climate control.

The joint Germany, India and Chile LOHAFEX project seems to support the position that the influence of iron fertilization is currently rather weak because it operates under stricter conditions than previously anticipated, making it less likely to be part of the solution for effective removal of atmospheric CO2.17,18 LOHAFEX entailed spreading 6 tons of iron sulfate over 300 square kilometers of ocean in the Southwest Atlantic Sector of the Southern Ocean, within the core of an eddy, and observing the results over a 39-day period. Initially the additional iron stimulated phytoplankton growth, doubling their total biomass in the first 14 days due to CO2 absorption.17 However, growth was stymied by an increase in predation by zooplankton and amphipods. Increased predation is a significant negative because it reduces the amount of carbon removed from the surface layer, which in turn reduces the overall effectiveness of the fertilization. When thinking about it logically, the increase in predation is not surprising given the strict controls nature applies to limit rampant biosphere alterations. One bright spot may be the lack of dead-zone formation, but that result comes with two caveats. First, the time frame of the investigation may not have been long enough to nurture dead-zone generation. Second, there were no significant differences in bacteria concentrations between the areas inside and outside the fertilized region, largely because of the significant increase in predation.

The reason that LOHAFEX failed where other fertilization investigations succeeded lies in the type of plankton that bloomed. The successful experiments created blooms of diatoms, algae that use silicic acid to generate silica shells to protect against grazers.17 Unfortunately, there are not sufficient quantities of silicic acid in the Southern Ocean, eliminating the ability to fertilize diatoms. The LOHAFEX study seems to eliminate the Southern Ocean as an environment for fertilization-based CO2 removal under standard protocols by identifying additional elements that may be required for any level of success. The potential loss of the Southern Ocean is significant for two reasons: fertilization in warmer tropical regions has significant problems increasing CO2 absorption because pre-existing phytoplankton already consume the available nutrients, and creating blooms in more temperate regions is difficult because fewer nutrients are available. The Southern Ocean is a ‘best of both worlds’ type environment (large area, relatively low natural plankton growth and high nutrient content).

Another method available is directly removing CO2 from the atmosphere using technology, not nature. The technological removal of atmospheric CO2 is referred to as ‘air capture’. In some contexts air capture really should not be viewed as geo-engineering because technically no meaningful change is being applied to nature. Instead it can be viewed as humans correcting past mistakes by removing the CO2 previously emitted. Early on, air capture was disparaged because many believed it would be too difficult to actually capture CO2 directly from the air due to the extremely low CO2 partial pressure and perceived unfavorable thermodynamics. However, this concern has proven to be more troublesome in theory than in practice. Despite the process being possible, air capture still has significant economic and energetic hurdles to surpass.

Clearly removing CO2 from the atmosphere should be the primary goal, but air capture is tricky because there is no significant market for the captured CO2, so the price of operation needs to be generally affordable before any private firm or government would get involved. Pricing is probably the most controversial subject surrounding air capture. Most air capture proponents, with one notable exception that will be addressed later, state that although air capture currently has an average price of around $500 per absorbed ton of CO2, this price will drop significantly over time, to something like $100-200 per ton, in similar fashion to most technology. One group, Global Research Technologies (GRT), and its spokesperson, Klaus Lackner, claim that their air capture device already operates at a price point of $100 per ton and that with time the operating cost will drop to under $30 per ton.19 So why are these estimates controversial?

Putting GRT and Klaus Lackner on hold for a moment, most air capture systems capture CO2 from the air via interaction with an alkaline NaOH solution, which has a thermodynamically favorable reaction with CO2 resulting in the formation of dissolved sodium carbonate and water. The carbonate then reacts with calcium hydroxide (Ca(OH)2), resulting in the generation of calcite (CaCO3) and the reformation of the sodium hydroxide. This process of causticization transfers a vast majority of the carbonate ions (≈94-95%) from the sodium to the calcium cation, and the calcium carbonate precipitate is then thermally decomposed to regenerate the previously absorbed CO2 as a gas. The final step pairs this thermal decomposition of the calcite, in the presence of oxygen, with the hydration of lime (CaO) to recycle the calcium hydroxide.20 This complete process is illustrated in the below figure.20
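For reference, the four steps described above correspond to the following overall reactions. This is a simplified summary written from standard chemistry; phase labels, water of solution and the oxygen-fired heating of the calciner are omitted, and the step names are the conventional ones rather than labels taken from the cited figure.

```latex
\begin{align*}
\text{Absorption:}     &\quad 2\,\mathrm{NaOH} + \mathrm{CO_2} \rightarrow \mathrm{Na_2CO_3} + \mathrm{H_2O} \\
\text{Causticization:} &\quad \mathrm{Na_2CO_3} + \mathrm{Ca(OH)_2} \rightarrow 2\,\mathrm{NaOH} + \mathrm{CaCO_3} \\
\text{Calcination:}    &\quad \mathrm{CaCO_3} \rightarrow \mathrm{CaO} + \mathrm{CO_2} \\
\text{Hydration:}      &\quad \mathrm{CaO} + \mathrm{H_2O} \rightarrow \mathrm{Ca(OH)_2}
\end{align*}
```

Summed together the reagents cancel, so the loop consumes no net chemicals. What it consumes is energy, chiefly the heat for calcination, which is why the cost discussion centers on energy requirements.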

Basically there is little difference in the overall process regardless of how the capture system is designed. There are differences in execution, in that some choose to utilize a spray tower to initiate contacting (the interaction between the CO2 and the sodium hydroxide), others use large fan-like blades and others still use other systems. Despite these differences, the reaction scheme remains similar. Therefore, it is difficult to see how costs can be cut by 60-80% through simple changes in efficiency schemes. Instead, if costs are going to be lowered to the extent that most air capture prognosticators predict, a new interaction, step or chemical will have to be introduced or an existing one removed.

Mr. Lackner and GRT believe that their proprietary resin, which is used in place of NaOH, is the new technology that significantly reduces price, hence their much lower cost estimates than those of other air capture designers. However, GRT and Mr. Lackner have yet to release any published data regarding the interaction, efficiency or any other functionality from any capture experiments. Nor are any specifics about the energetics of their system discussed in any press releases or news stories. Without that information, it appears that the air capture system design is similar in all aspects to one utilizing NaOH, with the exception of the initial binding step. In fact, all GRT and Mr. Lackner have done to communicate information about their air capture system is put out some press releases and conduct interviews proclaiming the greatness and cheapness of their system. The ‘technical literature’ section of the GRT website has been ‘coming soon’ for over two years.19 So until they release some actual data in a legitimate peer-reviewed journal, any claims made by GRT or Mr. Lackner must be taken with a grain of salt.

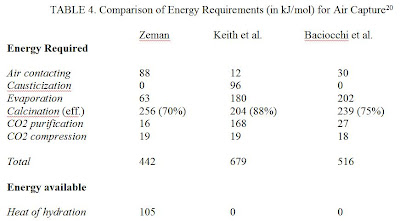

With the cost per ton of CO2 absorbed being so important, it is reasonable to calculate the costs with the information currently available about air capture systems. “Energy and Material Balance of CO2 Capture from Ambient Air” by Frank Zeman summarizes the energy requirements to capture one mole of CO2 for three of the most promising air capture schemes.

Using this energy information from Zeman and drawing information from the EIA regarding energy generation from coal and natural gas combustion, a cost structure for each air capture system can be generated. It is worth noting that no air capture system has yet been constructed to scale and operated over a significantly long time period, so realistically these cost estimates lean toward an optimistic outcome.

According to the EIA, coal with a carbon content of 78 percent and a heating value of 14,000 Btu per pound emits about 204.3 pounds of CO2 per million Btu when completely burned. Complete combustion of 1 short ton (2,000 pounds) of this coal will generate about 5,720 pounds (2.86 short tons) of CO2, and that coal produces ≈4.103 kW-h per lb of coal burned; thus typical coal-based power generates about 1.435 kW-h per lb of CO2 released.21 Factoring in an energy-use efficiency of ≈35% gives a value of 0.502 kW-h per lb of CO2 released. With this energy/emission ratio, coal is not an efficient enough energy provider to power air capture. Nor is using the 2007 grid [0.8858 kW-h per lb of CO2 released] a valid selection. Therefore, the approximately 1.255 kW-h per lb of CO2 released that natural gas generates will be utilized in the analysis.
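The coal figures above follow from a few unit conversions, sketched below. The Btu-to-kW-h factor is the standard conversion; the variable names are illustrative and everything else is taken from the paragraph.

```python
KWH_PER_BTU = 0.000293071          # standard unit conversion

heating_value_btu_per_lb = 14_000  # coal heating value (from the text)
co2_lb_per_million_btu = 204.3     # coal emission factor (from the text)
plant_efficiency = 0.35            # approximate efficiency (from the text)

# Pounds of CO2 released per pound of coal burned.
co2_lb_per_lb_coal = heating_value_btu_per_lb * co2_lb_per_million_btu / 1e6

# Thermal energy released per pound of coal, in kW-h.
thermal_kwh_per_lb_coal = heating_value_btu_per_lb * KWH_PER_BTU

# Energy per pound of CO2 released, before and after efficiency losses.
thermal_kwh_per_lb_co2 = thermal_kwh_per_lb_coal / co2_lb_per_lb_coal
usable_kwh_per_lb_co2 = thermal_kwh_per_lb_co2 * plant_efficiency

print(f"CO2 per short ton of coal: {co2_lb_per_lb_coal * 2000:.0f} lb")
print(f"thermal: {thermal_kwh_per_lb_co2:.3f} kW-h per lb CO2")
print(f"usable:  {usable_kwh_per_lb_co2:.3f} kW-h per lb CO2")
```

The usable figure is the one that matters for air capture: each pound of CO2 emitted at the power plant buys only about half a kW-h of energy to run the capture unit.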

Looking at Zeman first, the system design includes a step that redirects heat from the exothermic hydration reaction to a heat exchanger to generate a steam loop. This redirection supposedly reduces the overall energy requirements, thus reducing cost. So calculating the cost for this scheme will be conducted two ways, one including 100% efficiency for the hydration reaction and one excluding it entirely as it has yet to be successfully demonstrated on an industrial level.

First, the overall efficiency of the system is calculated. Efficiency is defined as how much CO2 is emitted to power the particular air capture system versus how much CO2 is absorbed by that system. The calculations below represent Zeman’s hypothesized energetics with 100% efficiency.

(442 kJ/mol - 105 kJ/mol) = 337 kJ required per mol of CO2 captured = 0.093611 kW-h/mol = 0.0021275 kW-h/g = 0.96503 kW-h/lb of CO2 captured. Dividing by the natural gas ratio gives (0.96503 kW-h/lb CO2 captured) / (1.255 kW-h/lb CO2 emitted) = 0.76895 lb of CO2 emitted for every 1 lb of CO2 captured.
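The same chain of conversions can be written as a short sketch. The molar mass of 44 g/mol and 453.592 g per lb are assumptions chosen here because they reproduce the figures above; the function name is illustrative.

```python
KJ_PER_KWH = 3600.0
CO2_GRAMS_PER_MOL = 44.0            # rounded molar mass (assumption)
GRAMS_PER_LB = 453.592
GAS_KWH_PER_LB_CO2_EMITTED = 1.255  # natural gas ratio used in the text


def kwh_per_lb_captured(kj_per_mol):
    """Convert an energy requirement in kJ per mol CO2 to kW-h per lb CO2."""
    kwh_per_mol = kj_per_mol / KJ_PER_KWH
    kwh_per_gram = kwh_per_mol / CO2_GRAMS_PER_MOL
    return kwh_per_gram * GRAMS_PER_LB


# Zeman's requirement minus the recovered hydration heat.
net_kj_per_mol = 442.0 - 105.0
energy = kwh_per_lb_captured(net_kj_per_mol)

# Pounds of CO2 emitted by the power source per pound captured.
emitted_per_captured = energy / GAS_KWH_PER_LB_CO2_EMITTED

print(f"{energy:.5f} kW-h per lb captured")
print(f"{emitted_per_captured:.5f} lb emitted per lb captured")
```

A ratio below 1 means the system removes more CO2 than its power supply emits; here roughly 23% of each pound captured is a net removal.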

Unfortunately electricity costs differ significantly depending on where the system is constructed. The New England states typically have values of 15-19 cents per kW-h where Midwestern states, with the exception of Illinois, rarely crest 10 cents per kW-h. Using the national average of 9.1 cents per kW-h,22 the cost of capturing one ton of CO2 is calculated to be:

0.091 dollars/kW-h * 0.96503 kW-h/lb of CO2 captured * 2000 lb/ton = $175.635 per gross ton of CO2 captured. However, for each lb of CO2 captured, 0.76895 lb of CO2 is emitted, so only 0.23105 of each gross ton is a net removal. The cost is therefore $175.635/0.23105 = $760.16 per net ton of CO2 captured.
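The gross-to-net adjustment can be captured in a small helper. The function `cost_per_ton` and its argument names are hypothetical, introduced only to make the steps explicit; the inputs are the values from the calculation above.

```python
LB_PER_SHORT_TON = 2000


def cost_per_ton(price_per_kwh, kwh_per_lb_captured, lb_emitted_per_lb_captured):
    """Return (gross, net) cost in dollars per short ton of CO2 captured.

    The net cost divides by the fraction of captured CO2 that is not
    cancelled out by emissions from powering the capture system.
    """
    gross = price_per_kwh * kwh_per_lb_captured * LB_PER_SHORT_TON
    net_fraction = 1.0 - lb_emitted_per_lb_captured
    if net_fraction <= 0:
        raise ValueError("system emits at least as much CO2 as it captures")
    return gross, gross / net_fraction


gross, net = cost_per_ton(0.091, 0.96503, 0.76895)
print(f"gross: ${gross:.2f} per ton, net: ${net:.2f} per ton")
```

Because the net fraction sits in the denominator, the net cost is very sensitive to the emission ratio: as the ratio approaches 1 the cost per net ton grows without bound.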

The costs per net ton of CO2 absorbed for all of the other systems are –

Zeman with no hydration heat utilized: net emission exceeds net capture [1.268 kW-h/lb CO2 captured / 1.255 kW-h/lb CO2 released = 1.01];

Baciocchi et al.: net emission exceeds net capture [1.481 kW-h/lb CO2 captured / 1.255 kW-h/lb CO2 released = 1.18];

Keith et al.: net emission exceeds net capture [1.948 kW-h/lb CO2 captured / 1.255 kW-h/lb CO2 released = 1.55].
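The three ratios above can be checked in a few lines. The energy figures are those quoted in the brackets; the dictionary labels and the `emission_ratio` helper are illustrative.

```python
GAS_KWH_PER_LB_CO2_EMITTED = 1.255  # natural gas ratio used in the text

# Energy needed per lb of CO2 captured (kW-h), as quoted in the text.
systems = {
    "Zeman, no hydration heat": 1.268,
    "Baciocchi et al.": 1.481,
    "Keith et al.": 1.948,
}


def emission_ratio(kwh_per_lb_captured):
    """Pounds of CO2 emitted by the power source per pound captured."""
    return kwh_per_lb_captured / GAS_KWH_PER_LB_CO2_EMITTED


for name, energy in systems.items():
    ratio = emission_ratio(energy)
    verdict = "net emitter" if ratio >= 1.0 else "net capture"
    print(f"{name}: {ratio:.2f} ({verdict})")
```

Put another way, with natural gas as the power source a system is carbon negative only if it needs less than 1.255 kW-h per lb of CO2 captured.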

However, Keith et al. now plan on using titanate in the process due to a discovered lower heat requirement, lowering the energy requirement and overall costs.23,24 Even so, this reduction does not seem to change the energy dynamics enough to make the process carbon negative.

So the cheapest air capture system that currently has published data for evaluation has a cost of $760.16 per net ton of CO2 removed from the atmosphere, higher than the previously cited estimate of $500 per ton. Why is the actual cost higher than the current estimates? It is probable that previous estimates did not include the efficiency term, instead just assuming that the power required for system operation involved no carbon emissions. Basically, those estimates treat a gross ton of captured CO2 as a net ton. This assumption is actually a valid one if solar power or another zero-emission energy source is utilized, but currently it is unrealistic to assume that the use of solar power would reduce costs to the low hundreds of dollars per net ton of captured CO2 because of all of the high costs associated with solar power. In addition, this analysis utilized an emission ratio derived entirely from natural gas because it is unrealistic to use alternative energy as the base, and using 100% coal or the national grid breakdown results in none of the strategies being carbon negative.

Note that the above cost expenditures are only valid if electricity is utilized to provide the energy for the entire process. However, the precipitate drying and calcination processes are thermal-based, which means that they can be driven by heat that does not necessarily need to be derived from electricity. The caveat to moving away from electricity is that the new source(s) providing the heat for these thermal reactions need to be on-site and produce trace/no emissions. These requirements significantly limit the available provider options; the most viable option now is concentrated solar power. The problem with concentrated solar power is that it limits the construction locations for air capture units, and these units become inconsistent due to their reliance on solar power and the lack of high quality storage batteries (although this may change in the future). Another concern is that there is inconclusive information regarding how to price the capital expenditures for the thermal infrastructure and providers; thus, although one might expect a reduction in cost, it is difficult to confirm or quantify it.

Overall, although co-existing electricity and thermal providers seem like a good idea to reduce energy requirements, costs and CO2 expenditure (increasing efficiency), in the long run it may not be a practical solution. The reason is that eventually a vast majority of global electricity will be produced by trace/no emission providers (at least it had better be, or it really does not matter, does it?), thus most of the efficiency and excessive cost problems with air capture will disappear (cost will still be an issue, just not a 900+ dollar issue). Therefore, constructing the thermal infrastructure for air capture units could be viewed as a waste of capital for the long-run application of air capture. However, depending on the nature of the developed infrastructure, the overall waste may not be significant enough to matter. The biggest problem when deciding between a 100% electrical and an electrical-thermal air capture system is prognosticating when the efficiency percentages of the 100% electrical system will rival those of the electrical-thermal system.

Unfortunately for those supporting air capture, the financial costs do not end with just energy use. Few estimates directly reference the initial capital cost of building the air capture facility, and no annual maintenance costs have been estimated, or at least they are never identified as such (some estimates could be grouping all costs together in one big estimate without identifying the individual components), which makes it difficult to compute a long-term price ratio. Before any of these costs can be estimated or computed accurately, a prototype system will need to be constructed, a CO2 absorbed per time ratio will need to be measured empirically over at least a year, and a system lifespan will have to be estimated. Some have estimated various CO2 absorbed per time ratios, most notably GRT’s 1 ton of CO2 per day per air capture system19, but have yet to construct a ‘to scale’ system to verify those estimates or to establish whether those absorption rates are in gross or net tons. There are some reports that claim a capture rate of 90,000 kg of CO2 (99.21 tons) per day;19 however, it is difficult to determine if that is an actual current rate of capture or one hypothesized for the future.

Initial construction, maintenance and even overall energy costs are only a small problem compared to the big problem plaguing current air capture systems: the process requires huge amounts of water. Based on the chemistry, this seems true no matter how the CO2 is captured. Normally in the reaction scheme water acts as a pseudo-catalyst, released when the sodium hydroxide interacts with the CO2 and then absorbed in the interaction with lime to form calcium hydroxide. However, the air capture system is not a closed system: a large and steady stream of atmospheric air is driven through it, and this air stream absorbs a large amount of the generated water, preventing it from being used later in the hydration reaction. Thus new water has to be supplied to the hydration reaction to complete the closed product-recycling loop. The water lost from the initial capture stage depends largely on the environmental temperature and the relative humidity of the air, in that a lower temperature and higher humidity result in a lower rate of water loss. Based on current operational estimates for the capture section it is reasonable to conclude that at least 1960.80 gallons of water would be required for every gross ton of CO2 absorbed (even more water per net ton due to efficiency concerns).20,25 Depending on the cost of water per gallon (usually anywhere from 4 to 8 cents), water replacement adds $78.43 - $156.86 to the cost of removing 1 ton of CO2 from the air for a 100% efficient system. This water replacement cost seems to be frequently ignored when discussing air capture costs dropping to $10-30 per ton.
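As a rough check on the water figures, the short Python sketch below multiplies the quoted water requirement by a price per gallon. The function name and the optional `net_fraction` parameter are illustrative assumptions; the 0.23105 net capture fraction used in the last line is the one from the cheapest system in the energy analysis.

```python
GALLONS_PER_GROSS_TON = 1960.80  # minimum water requirement quoted in the text


def water_cost_per_ton(price_per_gallon, net_fraction=1.0):
    """Water replacement cost in dollars per ton of CO2.

    net_fraction=1.0 models the 100% efficient case; a smaller value
    spreads the same water use over fewer net tons.
    """
    return GALLONS_PER_GROSS_TON * price_per_gallon / net_fraction


print(round(water_cost_per_ton(0.04), 2))  # low end of the price range
print(round(water_cost_per_ton(0.08), 2))  # high end of the price range
# Same water use at 5 cents per gallon, charged against net tons only:
print(round(water_cost_per_ton(0.05, net_fraction=0.23105), 2))
```

The first two lines reproduce the $78.43 and $156.86 bounds; the third shows how quickly the water bill grows once efficiency is taken into account.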

However, water cost is not the central issue. To put it in a more real context, the amount of water required to reduce the atmospheric concentration of CO2 by 1 ppm would be 1.49 x 10^13 gallons (assuming 100% efficiency). Multiply that number by at least 100, and then suggest where all of that water is going to come from.

With all of the analysis so far regarding the cost of air capture, it would go far to demonstrate the total significance of this cost in an example. What is the total realistic cost per ton of CO2 of a single air capture device based on current analysis?

As previously mentioned, there is not very much information regarding either initial capital construction costs or maintenance costs, but any estimate would correspond to both the lifecycle and the overall operational rate of the system. Assume a system operates at an absorbance rate of 1 net ton a day over a period of 40 years, including maintenance stoppages, and uses the optimal energy dynamics from Zeman. An estimate of $50,000 for the initial capital cost seems reasonable. Again, some have Mr. Lackner claiming a $30,000 capital cost, but the legitimacy of those claims is unclear.19 Even if the $30,000 figure were accurate, when was the last time a major construction project successfully adhered to the forecasted budget? Assume annual maintenance costs equal to 1% of the initial capital cost. Add costs for electricity and water, assuming 9.1 cents per kW-h and 5 cents per gallon respectively, with yearly increases in the cost of electricity and water of 0.5%. Taking all of these elements together, the total cost over the lifespan of the capture system is shown in the table below:

* Units in metric tons; running total;

** Units in gallons;

From the information presented in the table, over the course of a 40-year lifespan it will cost approximately 19.315 million dollars to capture 14,600 tons of CO2, or approximately 0.000187% of 1 ppm of CO2. To capture 1 ppm of CO2 using these estimates, it would cost approximately 10.32 trillion dollars. Frank Zeman has stated that he believes the total energy requirement to capture one mole of CO2 can be lowered to approximately 250 kJ/mol. Suppose this occurs: using the 250 kJ/mol figure in the above analysis, over 40 years it would cost approximately 8.699 million dollars to capture 14,600 tons of CO2 and 4.65 trillion dollars to reduce atmospheric CO2 concentrations by 1 ppm.
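The scale-up from a single unit to 1 ppm is a simple proportion, sketched below in Python. It assumes the conversion stated earlier in this post (roughly 7.8 GtCO2 per 1 ppm) and takes the lifetime cost totals as given inputs; the function names are illustrative.

```python
TONS_CO2_PER_PPM = 7.8e9   # ~7.8 GtCO2 raises or lowers the atmosphere by 1 ppm
LIFETIME_TONS = 365 * 40   # 1 net ton per day for 40 years


def share_of_one_ppm_percent(tons_captured):
    """Captured CO2 as a percentage of the amount equal to 1 ppm."""
    return tons_captured / TONS_CO2_PER_PPM * 100


def cost_per_ppm(lifetime_cost, tons_captured):
    """Dollars needed to remove 1 ppm at the unit's average cost per ton."""
    return lifetime_cost / tons_captured * TONS_CO2_PER_PPM


print(LIFETIME_TONS)
print(f"{share_of_one_ppm_percent(LIFETIME_TONS):.6f}% of 1 ppm")
print(f"{cost_per_ppm(19.315e6, LIFETIME_TONS) / 1e12:.2f} trillion dollars per ppm")
print(f"{cost_per_ppm(8.699e6, LIFETIME_TONS) / 1e12:.2f} trillion dollars per ppm")
```

Because the result is linear in the lifetime cost, any change to the capital, energy or water assumptions carries straight through to the per-ppm figure.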

Such a huge cost requirement forces a return to the aforementioned problem of the lack of a market for the captured CO2; if a company cannot make or receive any money capturing CO2, even a price of $1 per net ton of CO2 captured will be too expensive. One suggested option is transforming the CO2 into ‘carbon neutral’ hydrocarbon-based fuel, but the advantages of such a process are strained because it requires energy to create high purity streams of CO2 and hydrogen, energy currently derived from a fossil fuel combustion source.26 Also, the fuel will eventually release its carbon load back into the atmosphere, which defeats the real purpose of air capture: permanently removing atmospheric CO2 in order to reduce atmospheric CO2 concentrations and avoid detrimental climate consequences. Perhaps if society did not have the technology required to transition away from fossil fuels for another couple of decades such an idea might be noteworthy, but that is not the case. Thus, such a closed loop carbon neutral system seems to have no benefit and only results in wasted energy.

Another option is selling the CO2 to oil companies for use in enhanced oil recovery and/or enhanced coal-bed methane recovery. Although practiced today, it is unlikely that a reasonable percentage of CO2 can be utilized in this way to cover the costs of the program. Also most environmentalists would argue that society should not continue to draw out more fossil fuels if their use would simply generate more pollution that the air capture system would have to absorb at a later time, with the only difference being no market for the captured CO2, creating once again just another closed loop system that wastes energy.

One final option for creating a market is transferring the absorbed CO2 from the air capture station to greenhouses where the CO2 is pumped into the greenhouse environment to enhance plant growth. Unfortunately the size of this market appears rather insignificant and unable to absorb a vast quantity of collected CO2.

With none of the most popular options for profitability being valid, who will bear the cost burden of employing an air capture strategy? It is difficult to imagine any private company actually undertaking an air capture program large enough to significantly reduce atmospheric CO2, and there is no point in undertaking an air capture program if it is not going to be large enough to do so. Therefore, governments have to somehow subsidize an air capture program, more than likely through a carbon tax or a cap and trade system using offsets to fund the program. This subsidy cannot just come from the United States, but must come from a number of world governments. Overall, at this point in time, unless governments get involved it is difficult to imagine an air capture program ever being implemented.

Another method to remove atmospheric carbon gaining in popularity is the use of bio-char. In essence, bio-char is black carbon synthesized through pyrolysis of biomass. Bio-char is effective because it is believed to be a very stable means of retaining carbon, sequestering it for hundreds to thousands of years. Of course directly testing such a claim is almost impossible, but because charcoal is regarded as a specific form of bio-char and radioactive dating has established the age of old charcoal deposits, proponents believe that similar storage properties will exist for other end products of pyrolysis from other feedstock materials.

The general scheme behind bio-char in carbon sequestering takes advantage of the natural photosynthetic cycle in most forms of flora. Plants normally release any absorbed CO2 via respiration through the course of their life or hold it until they die and release it through oxidation/decomposition after death. However, using those plants as feedstock in a pyrolysis reaction to form bio-char reduces the amount of CO2 released back into the atmosphere. Three products typically result from a pyrolytic reaction: bio-char, bio-fuel and syngas.27 Slow pyrolysis (slow heat and volatilization rates at 300-350 °C) typically results in higher bio-char yields, whereas fast pyrolysis (higher heat and volatilization rates at 600-700 °C) typically results in higher bio-fuel yields.27 Therefore, bio-char has two distinct influences on the global carbon cycle: CO2 entrapment and bio-fuel production to offset fossil fuel use in the transportation sector. However, for the purposes of sequestering CO2 from the atmosphere, maximum bio-char yield would be advisable. Maximum yields of bio-char typically range from 40-50% under slow pyrolysis conditions.27

Another side advantage to bio-char is that when it is integrated into soil, it increases the quality of that soil enhancing the growth rates and yields of any future crops. There is little doubt that bio-char improves the quality of soil on a general level by aiding in the supply and retention of nutrients, yet some questions remain about water retention and crop yields because of the lack of sufficient field studies.28,29,30 However, for the purpose of this analysis, the question will be how useful is bio-char as a means of carbon sequestration?

Carbon sequestration potential of bio-char as a tool to limit climate change depends largely on four separate factors: stability of bio-char in a given storage medium, total rate of change in greenhouse gas emission from feedstock sources, bio-char capacity in a given storage medium and the economic and environmental requirements in the production of bio-char.

Bio-char within the confines of specific environments has demonstrated a significant amount of stability. For example, the most popular example of bio-char, Amazonian terra preta, supports bio-char stability with a carbon age of 6000 years.28,31 Also, charcoal in the North Pacific Basin has been shown to be hundreds of thousands to millions of years old.32

However, despite these stability studies, little work has been done regarding the oxidation potential of fire or microbial activity on black carbon. If both fire and microbial activity have even a marginal ability to oxidize black carbon, then bio-char storage sites would need to avoid forestry soils, arid regions and other high turnover regions, and would need to be located in regions that will not experience significant human disturbance.

Another issue of complexity with bio-char is its lack of homogeneity. Different fractions of bio-char will decompose at different rates under different conditions.33 Various studies place half-lives for bio-char in a range from 100 years to 5000-7000 years.34 Fortunately this mixed lifespan may not be a critical issue if all of the fractions of bio-char decompose over a sufficiently long time. Therefore, bio-char proponents need to identify the minimum half-life that is appropriate for carbon sequestration and then identify what conditions will generate a half-life that equals or exceeds this target in the resultant bio-char. A major concern regarding longevity studies of bio-char is that the popular press frequently cites the common example of terra preta in the Amazon without acknowledging the possibility that bio-char from accelerated synthesis may not have similar features. Overall there is still more work to be done on bio-char longevity and stability.

Almost all carbon sequestering strategies function on the premise that the negative carbon potential of the strategy will be realized in the future, not the present. Typically these strategies will generate more CO2 in the short-term than they absorb/sequester, but will absorb/sequester more CO2 in the long-term than they produce in their lifetimes. However, the rate at which this turnover occurs is important, because if it will take hundreds of years for a strategy to become carbon negative then the strategy will not be useful. For bio-char the initial pyrolysis process will produce CO2, usually at about 45-55% of the total carbon content of the feedstock.27,35 Recall that bio-char is considered carbon negative because it stores carbon from feedstock that would have otherwise released its carbon content into the atmosphere after months to years of decomposition. So the speed and size of the transition from carbon positive to carbon negative for bio-char largely depends on the rate at which CO2 would have been released from the pyrolysed biomass had it not been pyrolysed.

A simple equation can be utilized to describe this issue as shown below:

CO2s = CO2d/dt – CO2e

where CO2s = CO2 Saved/Change; CO2d/dt = biomass decomposition rate; CO2e = CO2 released via pyrolysis;

Assuming the bio-char remains stable at least past the pre-assigned target for bio-char sequestration, two critical factors drive the magnitude and speed of the equation: the decay half-life of the decomposing biomass and the amount of CO2 released in the pyrolysis process. The decay half-life is important because if a particular feedstock has a decay/release rate of hundreds of years, it would make little sense to char such a slow-releasing sample given the energy requirements of the pyrolysis process. However, if the feedstock has a decay/release rate of 5 years, it would make sense to char the quicker-release sample. Fortunately a vast majority of the feedstock candidates are quick-release samples, with the best candidates for bio-char production being feedstocks with high lignin concentrations such as husks, shells and kernels due to higher bio-char yields.27,36
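To make the dependence on decay half-life concrete, here is a minimal Python sketch of the break-even point. It rests on assumptions that are not in the sources cited here: untreated biomass is taken to release its carbon by simple exponential decay, all of that carbon is counted as CO2, pyrolysis releases its share immediately, and the resulting bio-char is treated as perfectly stable. The function names are hypothetical.

```python
import math


def net_saving_fraction(t_years, decay_half_life, pyrolysis_release_fraction):
    """Fraction of feedstock carbon kept out of the air after t years.

    Negative values mean pyrolysis has so far released more carbon than
    natural decomposition would have (assumed exponential decay).
    """
    decomposed = 1.0 - 2.0 ** (-t_years / decay_half_life)
    return decomposed - pyrolysis_release_fraction


def break_even_years(decay_half_life, pyrolysis_release_fraction):
    """Years until charring the feedstock becomes carbon negative."""
    if not 0.0 < pyrolysis_release_fraction < 1.0:
        raise ValueError("release fraction must be between 0 and 1")
    return -decay_half_life * math.log2(1.0 - pyrolysis_release_fraction)


# Quick-release feedstock (5-year half-life) vs. slow-release (200-year),
# both with half of the carbon released during pyrolysis.
print(break_even_years(5, 0.5))
print(break_even_years(200, 0.5))
```

Under these assumptions, a 50% pyrolysis release means the break-even time simply equals the feedstock's decay half-life, which is why slow-decaying feedstock is a poor candidate.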

Another issue that is rarely discussed by bio-char proponents is any influence that mass deposits of bio-char would have on the average albedo of the Earth. Large deposits of bio-char could darken the Earth’s surface at the point of entry, which will increase the probability of thermal absorption of sunlight at those areas and increase localized surface temperature. This change in surface albedo could neutralize some to most of the carbon sequestering benefit of bio-char.

Regarding the maximum concentration of black carbon allowable in soils, the aforementioned terra preta in the Amazon provides a useful demarcation. The soil organic carbon content of terra preta can be as high as 250 Mg per hectare, where up to 40% of this soil organic carbon is black carbon.35 Overall the expectation of 125 Mg per hectare as a ceiling is a rational one. The biggest concern stemming from bio-char soil concentration studies is that the observed soils and land mass were tropical; few studies have been conducted using fertile temperate soils, which naturally have higher soil organic carbon contents. This lack of research does make sense in that researchers exploring the ability of bio-char to increase crop yields would focus more on revitalizing poor soils than on augmenting richer soils; however, the amount of land required to sequester enough CO2 to avoid significant environmental damage, even only in part, will require the use of richer soils as a storage base. This lack of soil type study is also meaningful because even if no bio-char ‘plantation’ strategy is executed, this knowledge will be needed to determine whether or not farmer x with soil type y should utilize bio-char on his/her farm.

Another question is whether rate of incorporation has any significant effect on capacity. For example, the terra preta in the Amazon formed over thousands of years; would soil be able to absorb these quantities over a much shorter time period of decades to centuries? With rising global populations it is important to identify the capacity ceiling for bio-char so that crop yields are not negatively affected, causing food price spikes due to failed productivity.

The problem of land use is an important issue to consider for bio-char production. Proponents like to think that a significant quantity of bio-char can be acquired from agricultural and forestry residues, but stripping these residues can significantly increase the probability of erosion damage and potentially reduce soil bacteria. Therefore, it is important to consider where the residues are coming from. Returning bio-char to the point of extraction may ease some of these effects, but it is difficult to conclude how stable the bio-char would be in these environments, although it seems rational to expect normal levels of stability.

Part of the reason that biomass is proposed for synthesis of bio-char is that, similar to biomass energy production, nearly every form of biomass can be converted to bio-char. However, there are some transportation issues because unless the bio-char infrastructure results in a vast number of pyrolysis plants spread out all over the world, something some proponents would like to see, biomass will need to be transported from field to plant and emissions from the transportation medium could remove any significant benefit. In the end decisions will have to be made to maximize the effect of land use in how it will be split between bio-char production, biomass production, re-forestation and food production.

For all of the questions preceding this point, the biggest might be how much bio-char can actually be produced in a given year. Bio-char production is significantly impacted by the amount of energy generated from biomass, which is theorized to partially power the energy requirements for bio-char production. However, as mentioned previously in the energy gap post, prognosticating a total energy generation from biomass is incredibly difficult, largely because most proponents of biomass tend to be too optimistic in estimating the infrastructure devoted to feedstock production. Another concern in the biomass/bio-char analysis is that few people actually price the required infrastructure when estimating the total energy generation potential, especially because it is highly probable that biomass co-firing rates will be negatively affected by the scrapping of various coal based power plants due to climate change policy. It is probable that there will be a tipping point in biomass energy generation costs where the cost of electricity will jump from 5-13 cents37 to a higher number due to the land and water requirements necessary to achieve the 100-300 EJ per year required for significant bio-char production.38

Currently it is estimated that the global potential for bio-char production is 0.6 ± 0.1 PgC per year with an extrapolation to 5.5 to 9.3 PgC per year in 2100.35 However, as discussed above, those estimates are very difficult to verify or even take seriously because they utilize the maximum possible upper limit. Realistically it seems rather silly to try to estimate something 90 years into the future; most people are still waiting for their flying car. Overall, when considering the growth potential of bio-char, the most conservative estimate of growth possible should be used. For prognostication, probabilities must be valued over lower or upper bounds of possibility.

One of the more realistic studies regarding bio-char is located on the International Bio-char Initiative (IBI) website and looks to quantify the ability of bio-char to be an effective and significant tool in reversing atmospheric CO2 accumulation and possibly climate change. The figure below, from the IBI website, illustrates the model results.

The wedge demarcation is a reference to the famous Pacala and Socolow paper.39 The target goal supported by bio-char proponents is the annual removal of 1 Gt of carbon (3.67 Gt of CO2) from the atmosphere. Each scenario uses the reference value that 61.5 Gt of carbon per year cycles through photosynthesis/respiration. The conservative wedge estimates biomass generated from only cropping and forestry residue that has no future purpose (≈ 27% of the total residue). The moderate and optimistic scenarios estimate utilization of 50% and 80% of total cropping and forestry residue respectively. Unfortunately the designation ‘no future purpose’ is rather suspect, as previously discussed. Each scenario assumed the use of slow pyrolysis (end production results in 40-45% bio-char) instead of fast pyrolysis (end production results in 20% bio-char).

Realistically it is highly unlikely that the optimistic plus scenario outlined in the IBI model will be achieved due to all of the additional requirements it demands, thus the best scenario that is probable would be the optimistic one. Suppose that happens: the optimistic scenario still only removes approximately 0.3667 ppm per year, a significant value and nice bonus, but one far short of what is needed to avoid significant and detrimental climate change with current and even future estimated emission patterns. Therefore, although bio-char can provide some relief, it is important to include other CO2 reduction strategies. The funny thing is that bio-char very well may save the Earth, just in a way that most proponents have not considered.
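For readers who want to move between the units used in this section, the Python sketch below converts between gigatons of carbon, gigatons of CO2 and ppm. It assumes the conversion given at the start of this post (about 2.123 Gt of carbon per 1 ppm) and standard atomic masses; the helper names are illustrative.

```python
GTC_PER_PPM = 2.123            # Gt of carbon per 1 ppm, as stated earlier in the post
CO2_PER_C = 44.009 / 12.011    # mass of CO2 per unit mass of carbon


def gtc_to_gtco2(gtc):
    """Convert gigatons of carbon to gigatons of CO2."""
    return gtc * CO2_PER_C


def gtc_to_ppm(gtc):
    """Atmospheric change in ppm for a given mass of carbon."""
    return gtc / GTC_PER_PPM


def ppm_to_gtc(ppm):
    """Mass of carbon corresponding to a change in ppm."""
    return ppm * GTC_PER_PPM


print(f"1 GtC = {gtc_to_gtco2(1.0):.2f} GtCO2 = {gtc_to_ppm(1.0):.3f} ppm")
print(f"0.3667 ppm per year = {ppm_to_gtc(0.3667):.2f} GtC per year")
```

On these conversions even the full 1 GtC wedge is under half a ppm per year, well below the 2.1 to 2.8 ppm annual increase estimated at the start of the post.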

In addition to air capture and bio-char, mineral weathering has been explored as an idea to neutralize CO2 concentrations at point sources, but this interest has expanded to potentially using it as a means to draw-down existing atmospheric CO2 concentrations.

In nature magnesium-silicate minerals such as olivine (Mg2SiO4) and serpentine [Mg3Si2O5(OH)4] can react with CO2 producing magnesite (MgCO3). Wollastonite (CaSiO3) is also capable of reacting with CO2 to produce calcite (CaCO3).40 Unfortunately natural weathering is too slow to significantly reduce the rapidly accelerating CO2 concentrations that the environment is experiencing now.6,41 Investigations of the ability of these minerals to interact with CO2 occur within two different methodologies, ex situ (above ground using a chemical processing plant) and in situ (below ground using little to no chemical or mechanical alteration).40 Clearly between the methods in situ has a significant energy and economic advantage due to the lack of processing facilities and alterations to the minerals, but ex situ has the potential for a significantly higher reaction rate and percent conversion advantage. With speed becoming more and more of a factor in the reduction of atmospheric CO2 concentration, ex situ is currently a more valuable method to examine.

As previously mentioned, direct carbonation of minerals like olivine and serpentine is slow, in large part due to the presence of the magnesium and depending on how much water is bound to the mineral. The initial work to accelerate the reaction rate of olivine and serpentine involved a process utilized by a familiar individual in this post, the aforementioned Klaus Lackner, which involved dissolving the mineral in question in hydrochloric acid (HCl) to produce silica and MgCl2.42,43 Although the process successfully removed the magnesium, the economic costs of separating the MgCl2 from silica were significant due to gel formation and the energy required after the HCl dissolution step. The separation process involves a number of extraction steps focusing primarily on crystallization and dehydration, eventually generating Mg(OH)2 which is then carbonated. The reaction scheme for serpentine is shown below.44

Fortunately a more economically viable process was identified, serpentinization, using a hydrothermal fluid containing CO2 forming magnesite (MgCO3). If CO2 activity is significantly high only carbonate and silicic acid form. Clearly the advantage between methods is the lack of extraction steps to generate magnesite and the elimination of the solid liquid separation step due to lack of silica gel. The serpentinization reaction scheme is shown below.45

Supplies of olivine and serpentine may never be an issue as sizable deposits exist all over the world, including significant concentrations on both the West and East Coasts of the United States.46 Therefore, the economic viability of ex situ carbonation is dependent on the reaction rate and generating a reaction completion of 90% or greater. Reaction rates are influenced by increasing CO2 (aq) activity, raising temperature, reducing particle size, disrupting crystal structures and, when applicable, removing hydration water.40 Of course all of these factors involve energy and/or economic costs. Reducing particle size is especially important because olivine and serpentine react according to the shrinking-particle model and the shrinking-core model respectively.40 In the shrinking-particle model, the particle surface reacts to release magnesium into solution followed by precipitation of the produced magnesium-carbonate particles from the solution; therefore, the smaller the particle (and so the larger the surface area per unit mass) the higher the reaction rate.40 However, it costs more and more energy to generate a smaller and smaller particle.

Recalling the importance of reaction efficiency (i.e. extent of reaction, Rx), typically acquiring a 100% reaction percentage is unrealistic. Rx is largely dependent on pretreatments and reaction conditions, with significant parameter crossover with those factors that influence reaction rates. The two most important methods for increasing Rx are reducing particle size and removing any chemically bound water.40

One of the most important factors in measuring the technical and economic viability of mineral sequestration is the ratio (RCO2) between ore mass and CO2 carbonation. Normally RCO2 assumes a 100% conversion of Fe+2, Mg and Ca and creates a baseline of mineral mass required to carbonate one unit of CO2 mass. Despite vast quantities of available minerals, regardless of which is selected, a lower RCO2 number would be better because the less mass required to sequester the same amount of CO2 would also result in reduced energy and economic costs. Olivine has the lowest RCO2 value at 1.6, followed by serpentine at 2.2 and wollastonite at 2.7.40
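As a sanity check on these ratios, the Python sketch below computes the ideal stoichiometric RCO2 for the pure end-member minerals, assuming complete conversion of every Mg or Ca atom to carbonate. The helper names are illustrative and the atomic masses are standard rounded values. Pure olivine reproduces the 1.6 figure; pure serpentine and wollastonite come out slightly below the quoted 2.2 and 2.7, presumably because the cited values reflect real ore compositions (that explanation is an assumption, not something stated in the source).

```python
ATOMIC_MASS = {"H": 1.008, "C": 12.011, "O": 15.999,
               "Mg": 24.305, "Si": 28.086, "Ca": 40.078}


def molar_mass(formula):
    """Molar mass in g/mol from a dict of element -> atom count."""
    return sum(ATOMIC_MASS[element] * count for element, count in formula.items())


CO2 = {"C": 1, "O": 2}

# (formula, moles of CO2 bound per mole of mineral at full conversion)
MINERALS = {
    "olivine": ({"Mg": 2, "Si": 1, "O": 4}, 2),               # Mg2SiO4
    "serpentine": ({"Mg": 3, "Si": 2, "O": 9, "H": 4}, 3),    # Mg3Si2O5(OH)4
    "wollastonite": ({"Ca": 1, "Si": 1, "O": 3}, 1),          # CaSiO3
}


def ideal_rco2(mineral):
    """Mass of pure mineral needed per unit mass of CO2 carbonated."""
    formula, co2_moles = MINERALS[mineral]
    return molar_mass(formula) / (co2_moles * molar_mass(CO2))


for name in MINERALS:
    print(f"{name}: ideal RCO2 = {ideal_rco2(name):.2f}")
```

The ordering (olivine lowest, wollastonite highest) matches the ranking given above even where the absolute values differ.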

One of the biggest problems facing serpentine as a viable reaction candidate is the additional energy required to remove water in order to improve the reaction rate. The additional 290 to 325 kW-h/ton, depending on whether the serpentine is antigorite or lizardite,40 significantly impacts the efficiency of carbon sequestering, a problem that was discussed previously for air capture strategies.

Olivine and wollastonite do not have the same problem with significant associated hydration water, thus little to no heat pretreatment needs to be utilized. Most of the energy used in the preparation process for these two minerals comes from reducing particle size through grinding. It typically costs 10 – 12 kW-h/ton to reduce the particle size to 75 microns (commonly referred to as 200 mesh).40,47 At 200 mesh, olivine particles typically have an Rx of 14% after one hour and 52% after three hours when saturated with CO2 (aq). The figure below illustrates the change in Rx after one hour vs. the energy utilized to decrease particle size.40

Surface area has the greatest influence on Rx and reaction rate; temperature has the second greatest influence, and that influence extends to which distribution strategies are viable. The figure shown below demonstrates that temperatures that exceed 100 °C are preferable.40 Rx values decrease when temperatures exceed a certain level because the CO2 (aq) activity decreases and the reaction becomes thermodynamically less favorable. Unfortunately the very low reaction rates at low temperatures decrease the probability of successfully using mineral sequestering strategies outside of specifically designed plants, as standard air temperature and pressure are much lower than desired.

One of the more significant studies of olivine driven carbon sequestration calculated that an hourly sequestering rate of 1,100 tons of CO2 would cost 3,300 tons of olivine and 352 MW-h of energy.48 This rate would result in total sequestration of 9,636,000 gross tons of CO2 a year (0.00127 ppm) for a single plant.
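The per-ton figures implied by that plant can be worked out directly. The short Python sketch below uses only the hourly rates quoted above and assumes continuous operation for 24 hours a day, 365 days a year; the variable names are illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # continuous operation, no downtime assumed

co2_tons_per_hour = 1100
olivine_tons_per_hour = 3300
energy_mwh_per_hour = 352

annual_co2_tons = co2_tons_per_hour * HOURS_PER_YEAR
olivine_tons_per_ton_co2 = olivine_tons_per_hour / co2_tons_per_hour
energy_kwh_per_ton_co2 = energy_mwh_per_hour * 1000 / co2_tons_per_hour

print(f"{annual_co2_tons:,} gross tons of CO2 per year")
print(f"{olivine_tons_per_ton_co2:.1f} tons of olivine per ton of CO2")
print(f"{energy_kwh_per_ton_co2:.0f} kW-h per ton of CO2")
```

Expressed per ton, the energy requirement is directly comparable to the grinding and heat pretreatment figures given earlier in kW-h/ton.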

In general the lowest costs for mineral based carbon sequestration were calculated at $78-81/ton for 100% pure olivine. Wollastonite ore (50% purity) also generates a competitive cost of $110/ton due to its greater reactivity, but that advantage is offset by its higher RCO2 value and lower purity. Unfortunately, unlike olivine and serpentine, resource supplies of wollastonite are limited, reducing its ability to make a significant contribution to reducing atmospheric CO2 concentrations.

Unfortunately there are some caveats to the estimated costs. First, the study was conducted in a high-pressure environment (350 psi), which is another factor that reduces the probability of deploying mineral sequestration passively in the environment; reaction rates are considerably slower at normal atmospheric pressure. Second, not all of the costs associated with the removal were included: transport costs were small due to proximity (something that would not always be true in an atmospheric strategy), and capital, maintenance and other overhead costs were excluded. However, despite these exclusions, the estimated costs of specialized mineral sequestering plants are low enough to warrant further research.

In addition, the CO2 needs to be in an aqueous form to have a reasonable probability of reacting with the given mineral at a respectable rate. Therefore, the vast majority of CO2 in the atmosphere will be unable to react spontaneously with any introduced minerals without pretreatment. Overall, at this point in time it is difficult to consider mineral sequestration an important method for the direct removal of atmospheric CO2, beyond what occurs naturally, without a specialized plant to increase reaction rates and extents of reaction. Mineral sequestration may nevertheless be an important element in the storage of CO2 removed from the atmosphere by other means. Commentary on the in situ methodology and its role in storage will be reserved for the future discussion about long-term carbon storage.

It appears that all major atmospheric CO2 removal options are either too limited in the amount of CO2 they will remove or too expensive. Regardless, the expense must be managed, because without the removal of already existing CO2, human civilization on Earth will be irrevocably changed.

Dr. James Hansen and others believe that a natural drawdown of 50 ppm by 2150 would be possible by establishing a dedicated program that limits deforestation, increases the rate of reforestation and makes limited use of bio-char (slash-and-char) in place of slash-and-burn agriculture.1 This type of drawdown would cost only millions instead of the billions to trillions that more advanced, technologically driven removal strategies cost at this point in time. So why not pursue this strategy? Why is speed so important?

The most important reason is that environmental changes are proceeding much faster than previously predicted. For example, the IPCC Fourth Assessment Report projected that summers would be devoid of Arctic ice by the end of the century.49 However, only three years after the information for that report was compiled, ice melt has accelerated so quickly that some scientists believe it will take only a decade before there are summers devoid of Arctic ice.13 As previously mentioned, the loss of Arctic ice matters not only for the local ecosystem: replacing reflective white ice with dark blue water will also significantly increase the temperature of the oceans.

A possible explanation for the large gap between prediction and reality is that past estimates very likely overstated the cooling effect of aerosols on climate forcing, owing to the uncertainty of those effects.50 The general idea behind aerosols, excluding soot, is that they scatter sunlight, cooling the environment. Aerosols function under the same general principle as the sulfur dioxide geo-engineering idea. However, until recently climate models and satellite data conflicted: climate models predicted only a 10% reduction in warming, while satellite data indicated a 20% reduction. A new study has reconciled the discrepancy and determined that the 10% masking value is more accurate.51 With a less pronounced influence from aerosols (radiative forcing of -0.3 W/m2 instead of -0.5 W/m2)51 it is reasonable to anticipate a faster rate of climate change than that predicted by the IPCC.

It is impossible to rationally argue that global warming is not significantly influencing the climate.52 The somewhat scary thing is how long this influence has been at work: a recent study concluded that 90% of the changes in biological systems over the past 38 years were consistent with warming trends.53 If there were a more deterministic timeline of events, it would be easier to select the most economical strategy to ward off the more detrimental aspects of climate change. That is not the case, which leaves the available options split between greater economic advantage and greater certainty. Overall, with what is at stake, it makes more sense to bet on a more certain strategy that might cost more than on a cost-effective one that might not succeed. This statement does not mean that more natural strategies like reforestation and ‘slash-and-char’ should not be incorporated into a CO2 reduction plan of action, but that one cannot forego the more expensive technological strategies in favor of the more natural strategies.

Natural carbon sinks will be unable to offset the increase in emissions that will likely occur throughout the 21st century. Suppose for a moment that instead of a 50% reduction, the world becomes CO2 neutral by 2050 with an atmospheric concentration of CO2 topping out at approximately 450 ppm. Then assume only a 20% reduction in the capacity of natural sinks and negligible increases in all other GHGs. Under these very favorable conditions it would still take nature roughly 86 years (until 2136) before the CO2 concentration returned to what most view as the reasonably safe level of 350 ppm. Of course the above scenario is improbable, but even in that scenario average global temperatures will increase by, and remain elevated by, at least 2-3 °C over the next 80-100 years, which will generate some significant and permanent environmental damage. Thus, technologically driven atmospheric carbon capture will be necessary despite the cost, and it is important to take steps to lower that cost as much as possible. The following are just some of the steps that should be explored.
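The 86-year figure can be approximately reproduced with a short calculation. The sketch below is a simplification rather than a carbon-cycle model: it assumes the IPCC sink estimates quoted earlier in this article (about 8.056 GtCO2 a year for oceans and 3.30 GtCO2 a year for land), holds the reduced sink capacity constant over time, and uses the conversion of roughly 7.8 GtCO2 per 1 ppm. The function name and parameters are illustrative only.

```python
# Back-of-the-envelope drawdown time; a constant sink is a strong simplification.
OCEAN_SINK_GT = 8.056     # GtCO2 absorbed per year, figure quoted earlier
LAND_SINK_GT = 3.30       # GtCO2 absorbed per year, figure quoted earlier
GT_CO2_PER_PPM = 7.8      # GtCO2 per 1 ppm, figure quoted earlier


def years_to_target(start_ppm=450.0, target_ppm=350.0,
                    sink_reduction=0.20, net_emissions_gt=0.0):
    """Years for natural sinks alone to bring CO2 from start_ppm to target_ppm."""
    sink_gt = (OCEAN_SINK_GT + LAND_SINK_GT) * (1.0 - sink_reduction)
    drawdown_ppm_per_year = (sink_gt - net_emissions_gt) / GT_CO2_PER_PPM
    if drawdown_ppm_per_year <= 0:
        # Emissions match or exceed the sinks, so the target is never reached.
        return float("inf")
    return (start_ppm - target_ppm) / drawdown_ppm_per_year


if __name__ == "__main__":
    years = years_to_target()
    print(f"Years of natural drawdown: {years:.1f}")
    print(f"Target reached around: {2050 + round(years)}")
```

With zero net emissions after 2050 the result is close to 86 years. Passing even a few GtCO2 of residual annual emissions through `net_emissions_gt` lengthens the timeline considerably, which is the point of the scenario.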

First, reducing the dependency of an air capture system on fossil-fuel-derived energy will be an important cost-cutting step. The fewer emissions generated by the energy source servicing the air capture unit, the higher the net efficiency of that unit. The seemingly best universal option, barring the development of some new energy source, would be to install solar panels on some wing-like addition to the basic capture design. In special cases, wind may prove to be a more reliable source than solar. Nuclear could be an option, but construction times place strains on its viability.

The use of solar power has been suggested by proponents of air capture, but the solar power needs to be generated on-site, not drawn from a separate solar generating infrastructure some miles away. For example, it is unlikely that the cost of solar power per kW-h will ever drop below current fossil fuel energy prices, so at best the price per kW-h from a solar energy provider would be similar (9.1 cents). Returning to the Zeman cost analysis under that best case, the cost per net ton would no longer be $753.38 but the original gross ton price of $174.069, because no CO2 is being released to provide the energy of operation.
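The gap between the gross and net prices can be illustrated with a small calculation. This sketch assumes that the net-ton cost is the gross-ton cost divided by the fraction of captured CO2 that is not offset by emissions from the energy supply. That relationship is an assumption made here for illustration and is not confirmed by the Zeman analysis itself; the function names are hypothetical.

```python
# Illustrative gross-vs-net cost relationship; the formula is an assumption.

def implied_offset_fraction(gross_cost, net_cost):
    """Share of each captured ton cancelled out by energy-related emissions."""
    return 1.0 - gross_cost / net_cost


def net_cost_per_ton(gross_cost, offset_fraction):
    """Cost per net ton when offset_fraction of each captured ton is re-emitted."""
    if not 0.0 <= offset_fraction < 1.0:
        raise ValueError("offset_fraction must be in [0, 1)")
    return gross_cost / (1.0 - offset_fraction)


if __name__ == "__main__":
    gross, net = 174.069, 753.38   # dollars per ton, as quoted in the text
    offset = implied_offset_fraction(gross, net)
    print(f"Implied share of capture offset by emissions: {offset:.1%}")
    print(f"Net cost with fossil energy: ${net_cost_per_ton(gross, offset):.2f}")
    print(f"Net cost with zero-emission energy: ${net_cost_per_ton(gross, 0.0):.2f}")
```

Read this way, the two quoted prices imply that roughly three quarters of each captured ton is cancelled out when the energy comes from fossil fuels, and that a zero-emission supply at the same price per kW-h brings the net cost back down to the gross figure.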

Second and more importantly, each capture unit needs to have a means to either synthesize or recapture most of the water lost during operation. Recapturing could occur by running the output atmospheric air stream from the contactor into a compressor and then filtering out the water. Alternatively, lowering the temperature of the air before it enters the contactor would reduce the total amount of water lost, as would limiting construction of air capture units to environments with high levels of relative humidity. Unfortunately the first two strategies will require additional energy, but if that energy is provided from a zero-emission source the benefits will outweigh the disadvantages.

Third, a greater number of test-plots for exploration of bio-char need to be developed across a range of climates and soil types. Some test-plots have been developed, but the primary goal behind those plots was to better understand the ability of bio-char to aid in crop growth, not to identify the ability of bio-char to store carbon. Note that this suggestion does not advocate creating bio-char plantations, but instead looks to identify locations where one could deposit bio-char. A practice plot to determine how bio-char interacts with modern-day farming techniques should also prove useful in establishing whether bio-char can successfully sequester carbon over long periods of time and aid in increasing crop yield over a period greater than a few years.

Overall there is still a lot of work to do when it comes to neutralizing the increasing atmospheric CO2 concentration, but continued focus on the evolution of atmospheric CO2 extraction techniques will generate the means to avert some of the more detrimental environmental results. Therefore, it is important that attention focus not only on mitigation strategies that reduce the amount of CO2 and other GHGs released into the atmosphere to begin with, but also on strategies that will reduce the existing concentration of CO2 at a sufficient rate.

--

1. Hansen, James, et al. “Target Atmospheric CO2: Where Should Humanity Aim?” The Open Atmospheric Science Journal. 2008. 2: 217-231.

2. Enting, I.G., et al. “Future Emissions and Concentrations of Carbon Dioxide: Key Ocean/Atmosphere/Land Analyses.” 1994. CSIRO – Division of Atmospheric Research Technical Paper #31.

3. “Working Group I: The Physical Science Basis of Climate Change.” Intergovernmental Panel on Climate Change. 2007. In: http://ipcc-wg1.ucar.edu/wg1/wg1-report.html.

4. Caldeira, K, and Wickett, M. “Anthropogenic carbon and ocean pH.” Nature. 2003. 425: 365.

5. Keeling, C, and Whorf, T. “Atmospheric CO2 records from sites in the SIO air sampling network, Trends: A Compendium of Data on Global Change.” Carbon Dioxide Information Analysis Center, Oak Ridge National Laboratory, U.S. Department of Energy, Oak Ridge, Tenn., USA, 2004. (http://cdiac.esd.ornl.gov/trends/co2/sio-mlo.htm).

6. Ridgwell, Andy, and Zeebe, Richard. “The role of the global carbonate cycle in the regulation and evolution of the Earth system.” Earth and Planetary Science Letters. 2005. 234: 299– 315.

7. Moy, Andrew, et al. “Reduced calcification in modern Southern Ocean planktonic foraminifera.” Nature Geoscience. 2009. 2: 276-280.

8. de Moel, H, et al. “Planktic foraminiferal shell thinning in the Arabian Sea due to anthropogenic ocean acidification?” Biogeosciences Discussions. 2009. 6(1): 1811-1835.

9. Armstrong, R, et al. “A new, mechanistic model for organic carbon fluxes in the ocean: based on the quantitative association of POC with ballast minerals.” Deep-Sea Res. 2002. Part II 49: 219–236.

10. Klaas, C, Archer, D. “Association of sinking organic matter with various types of mineral ballast in the deep sea: implications for the rain ratio.” Glob. Biogeochem Cycles. 2002. 16(4): 1116.

11. Ridgwell, Andy. “An end to the ‘rain ratio’ reign?” Geochem. Geophys. Geosyst. 2003. 4(6): 1051.

12. Barker, S, et al. “The Future of the Carbon Cycle: Review, Calcification response, Ballast and Feedback on Atmospheric CO2.” Philos. Trans. R. Soc. A. 2003. 361: 1977.

13. Hawkins, Richard, et al. “In Case of Emergency.” Climate Safety. Public Interest Research Centre. 2008.

14. Canadell, Josep, et al. “Contributions to accelerating atmospheric CO2 growth from economic activity, carbon intensity, and efficiency of natural sinks.” PNAS. 2007. 104(47): 18866-18870.

15. Biello, David, et al. “Researchers Use Volcanic Eruption as Climate Lab.” Scientific American. January 5, 2007.

16. Angel, Roger. “Feasibility of cooling the Earth with a cloud of small spacecraft near the inner Lagrange point (L1).” PNAS. 2006. 103(46): 17184-17189.

17. “Lohafex project provides new insights on plankton ecology: Only small amounts of atmospheric carbon dioxide fixed.” International Polar Year. March 23, 2009.

18. Black, Richard. “Setback for climate technical fix.” BBC News. March 23, 2009.

19. Various Press-Releases and News Articles from GRT Website: http://www.grtaircapture.com/

20. Zeman, Frank. “Energy and Material Balance of CO2 Capture from Ambient Air.” Environ. Sci. Technol. 2007. 41(21): 7558-7563.

21. Hong, B.D, and Slatick, E. R. “Carbon Dioxide Emission Factors for Coal.” Energy Information Administration, Quarterly Coal Report. January-April 1994. pp 1-8.

22. “Electric Power Industry 2007: Year in Review.” Energy Information Administration. May 2008. pg 1.

23. Mahamoudkhani, M. and Keith, D.W. “Low-Energy Sodium Hydroxide Recovery of CO2 Capture from Air.” International Journal of Greenhouse Gas Control Technologies. Pre-Print.

24. Keith, David. “Direct Capture of CO2 from the Air.” Unpublished Presentation. www.ucalgary.ca/~keith

25. Stolaroff, Joshuah, et al. “Carbon Dioxide Capture from Atmospheric Air Using Sodium Hydroxide Spray.” Environ. Sci. Technol. 2008. 42: 2728–2735.

26. Zeman, Frank, Keith, David. “Carbon neutral hydrocarbons.” Phil. Trans. R. Soc. A. 2008. 366: 3901–3918.

27. Amonette, Jim. “An Introduction to Biochar: Concept, Processes, Properties, and Applications.” Harvesting Clean Energy 9 Special Workshop. Billings, MT Jan 25, 2009.

28. Glaser, B, et al. “The Terra Preta phenomenon – A model for sustainable agriculture in the humid tropics.” Naturwissenschaften. 2001. 88: 37–41.

29. Glaser, B, Lehmann, J, Zech, W. “Ameliorating physical and chemical properties of highly weathered soils in the tropics with charcoal - a review.” Biology and Fertility of Soils. 2008. 35: 4.

30. Lehmann, J., and Rondon, M. “Bio-char soil management on highly-weathered soils in the humid tropics.” Biological Approaches to Sustainable Soil Systems. 2005. Boca Raton, CRC Press, in press.

31. Soubies, F. “Existence of a dry period in the Brazilian Amazonia dated through soil carbon, 6000-3000 years BP.” Cah. ORSTOM, sér. Géologie. 1979. 1: 133.

32. Herring, J.R. “Charcoal fluxes into sediments of the North Pacific Ocean: the Cenozoic record of burning.” In: E.T. Sundquist and W.S. Broecker, Editors, The Carbon Cycle and Atmospheric CO2: Natural Variations, Archaean to Present, A.G.U. 1985. 419–442.

33. Hedges, et al. “The molecularly-uncharacterized component of nonliving organic matter in natural environments.” Organic Geochemistry. 2000. 31: 945–958.

34. Preston, C, and Schmidt, M. “Black (pyrogenic) carbon: a synthesis of current knowledge and uncertainties with special consideration of boreal regions.” Biogeosciences. 2006. 3: 397–420.

35. Lehmann, J, Gaunt, J, Rondon, M. “Bio-char sequestration in terrestrial ecosystems – a review.” Mitigation and Adaptation Strategies for Global Change. 2006. 11: 403–427.

36. Johnson, Jane, et al. “Chemical Composition of Crop Biomass Impacts Its Decomposition.” Soil Science Society American Journal. 2007. 71: 155-162.